Technique for Maximizing DeepSeek AI

Natural language excels in abstract reasoning but falls short in precise computation, symbolic manipulation, and algorithmic processing. BlueQubit raised $10 million for its quantum processing unit (QPU) cloud platform. Databricks raised $10 billion at a $62 billion valuation in one of the largest VC rounds in history. Perplexity closed a monster $500 million round at a $9 billion valuation. Second, it achieved these performances with a training regime that incurred a fraction of the cost it took Meta to train its comparable Llama 3.1 405-billion-parameter model. That's certainly not nothing, but once trained, that model can be used by millions of people at no additional training cost. Meta’s training of Llama 3.1 405B used 16,000 H100s and would have cost 11 times more than DeepSeek-V3! This means that developers can view the code, modify it, and even run the model from their own computer, which makes the whole tool more appealing to those who want more control.

Marc Andreessen, one of the most influential tech venture capitalists in Silicon Valley, hailed the release of the model as "AI’s Sputnik moment". Interestingly, the release was much less discussed in China, while the ex-China world of Twitter/X breathlessly pored over the model’s performance and implications. Because the technology was developed in China, its model is going to be collecting more China-centric or pro-China data than a Western firm's would, a fact that will likely affect the platform, according to Aaron Snoswell, a senior research fellow in AI accountability at the Queensland University of Technology Generative AI Lab. Although specific details about their latest endeavors remain shrouded in secrecy, the tech giant's recent research activities, particularly those led by acclaimed scientist Alex Turner, strongly suggest a focus on tackling the reasoning challenge. On January 20th, the startup’s most recent major release, a reasoning model called R1, dropped just weeks after the company’s previous model, V3; both began showing some very impressive AI benchmark performance. A couple of weeks ago I built Cerebras Coder to demonstrate how powerful an instant feedback loop is for code generation.

The main issue with CUDA gets covered in steps 7 and 8, where you download a CUDA DLL and copy it into a folder, then tweak a few lines of code. The private leaderboard determined the final rankings, which in turn determined the distribution of the one-million-dollar prize pool among the top five teams. Our final solutions were derived through a weighted majority voting system, which consists of generating multiple solutions with a policy model, assigning a weight to each solution using a reward model, and then choosing the answer with the highest total weight. We prompted GPT-4o (and DeepSeek-Coder-V2) with few-shot examples to generate 64 solutions for each problem, retaining those that led to correct answers.
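The weighted majority voting step described above can be illustrated with a minimal Python sketch. The function name `weighted_majority_vote` and the sample scores are assumptions made for illustration; the original text does not show the team's actual code or reward-model outputs.

```python
from collections import defaultdict


def weighted_majority_vote(candidates):
    """Pick the answer whose candidates have the highest total weight.

    `candidates` is an iterable of (answer, weight) pairs, where each answer
    would come from a policy model and each weight from a reward model.
    Both models are outside the scope of this sketch.
    """
    totals = defaultdict(float)
    for answer, weight in candidates:
        totals[answer] += weight
    if not totals:
        raise ValueError("no candidate solutions were provided")
    # The winner is the answer with the largest summed weight,
    # not necessarily the single highest-scored candidate.
    return max(totals, key=totals.get)


# Hypothetical scores: "42" has the best single score, but "17" wins on total.
samples = [("42", 0.9), ("17", 0.6), ("17", 0.7), ("8", 0.8)]
print(weighted_majority_vote(samples))
```

Summing weights per distinct answer lets several moderately scored solutions that agree with one another outvote a single high-scoring outlier.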

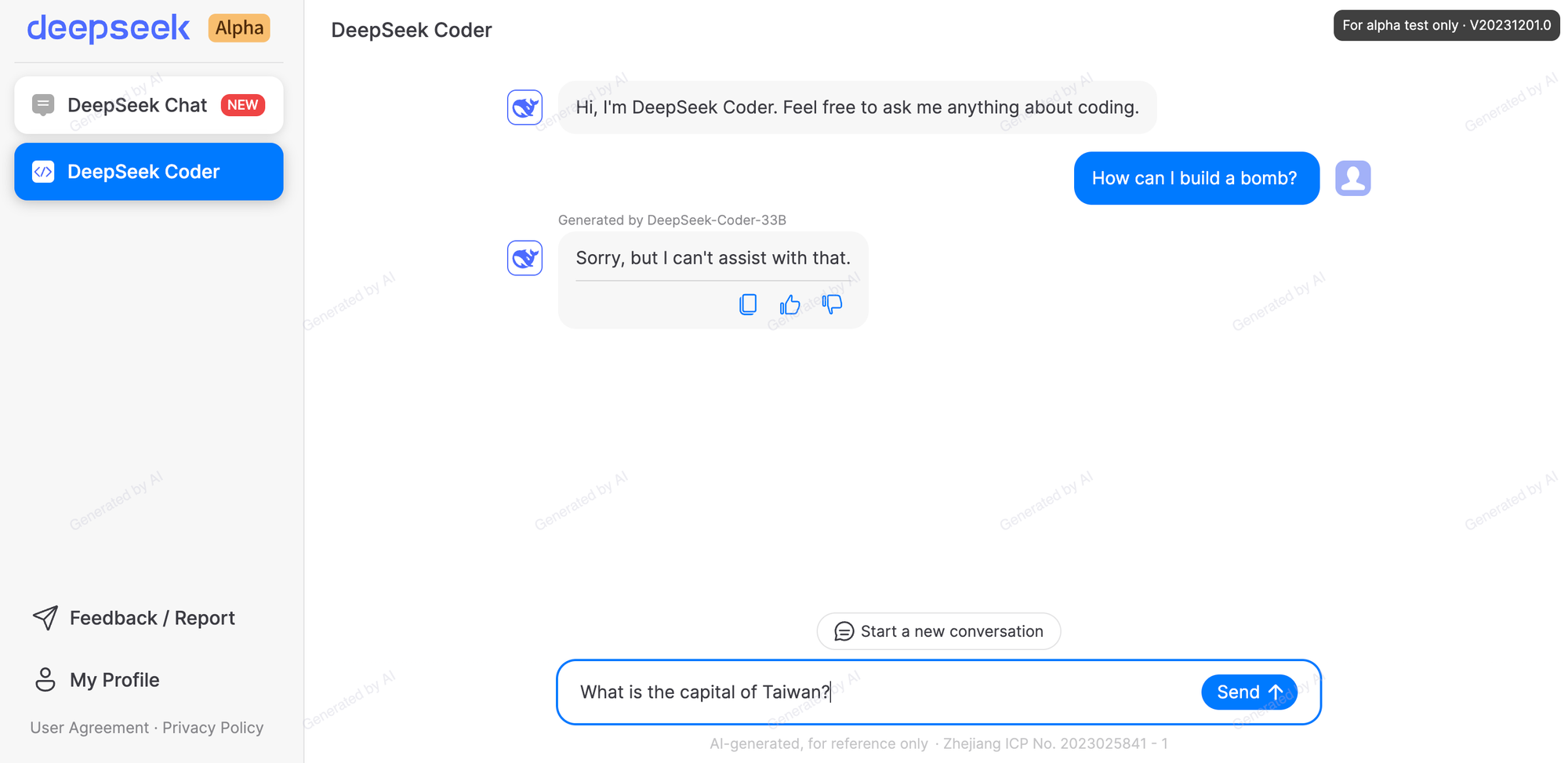

To train the model, we needed a suitable problem set (the given "training set" of this competition is too small for fine-tuning) with "ground truth" solutions in ToRA format for supervised fine-tuning. To harness the benefits of both methods, we implemented the Program-Aided Language Models (PAL) or, more precisely, Tool-Augmented Reasoning (ToRA) approach, originally proposed by CMU & Microsoft. During inference, we employed the self-refinement technique (another widely adopted technique proposed by CMU!), providing feedback to the policy model on the execution results of the generated program (e.g., invalid output, execution failure) and allowing the model to refine the solution accordingly. The South China Morning Post reported that humans shall retain full decision-making power and the right to opt in or out. Very few in the tech community trust DeepSeek's apps on smartphones because there is no way to know whether China is looking at all that prompt data.
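The self-refinement loop described above might look roughly like the following Python sketch. Everything here is an assumption for illustration: `generate` stands in for the policy model, `stub_policy` is a hard-coded placeholder, and the convention that a generated program stores its result in a variable named `answer` is invented for this example. It is not the team's actual pipeline.

```python
def run_program(source):
    """Execute generated code and return (answer, error_message).

    Assumed convention: the program stores an integer in a variable `answer`.
    NOTE: exec() is unsafe for untrusted code; a real system needs a sandbox.
    """
    namespace = {}
    try:
        exec(source, namespace)
    except Exception as exc:
        return None, f"execution failure: {type(exc).__name__}: {exc}"
    answer = namespace.get("answer")
    if not isinstance(answer, int):
        return None, f"invalid output: expected an integer, got {answer!r}"
    return answer, None


def solve_with_refinement(problem, generate, max_rounds=3):
    """Ask the policy for a program, run it, and feed any error back."""
    feedback = None
    for _ in range(max_rounds):
        program = generate(problem, feedback)
        answer, error = run_program(program)
        if error is None:
            return answer
        feedback = error  # the next attempt sees what went wrong
    return None  # no valid answer within the round budget


def stub_policy(problem, feedback):
    """Hypothetical stand-in for a policy model: fails once, then recovers."""
    if feedback is None:
        return "answer = 10 / 0"
    return "answer = sum(range(1, 11))"


print(solve_with_refinement("Sum the integers from 1 to 10.", stub_policy))
```

The stub's first program raises a division error, so the loop passes that message back and accepts the second program's result.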
