Learning Web Development: A Love-Hate Relationship

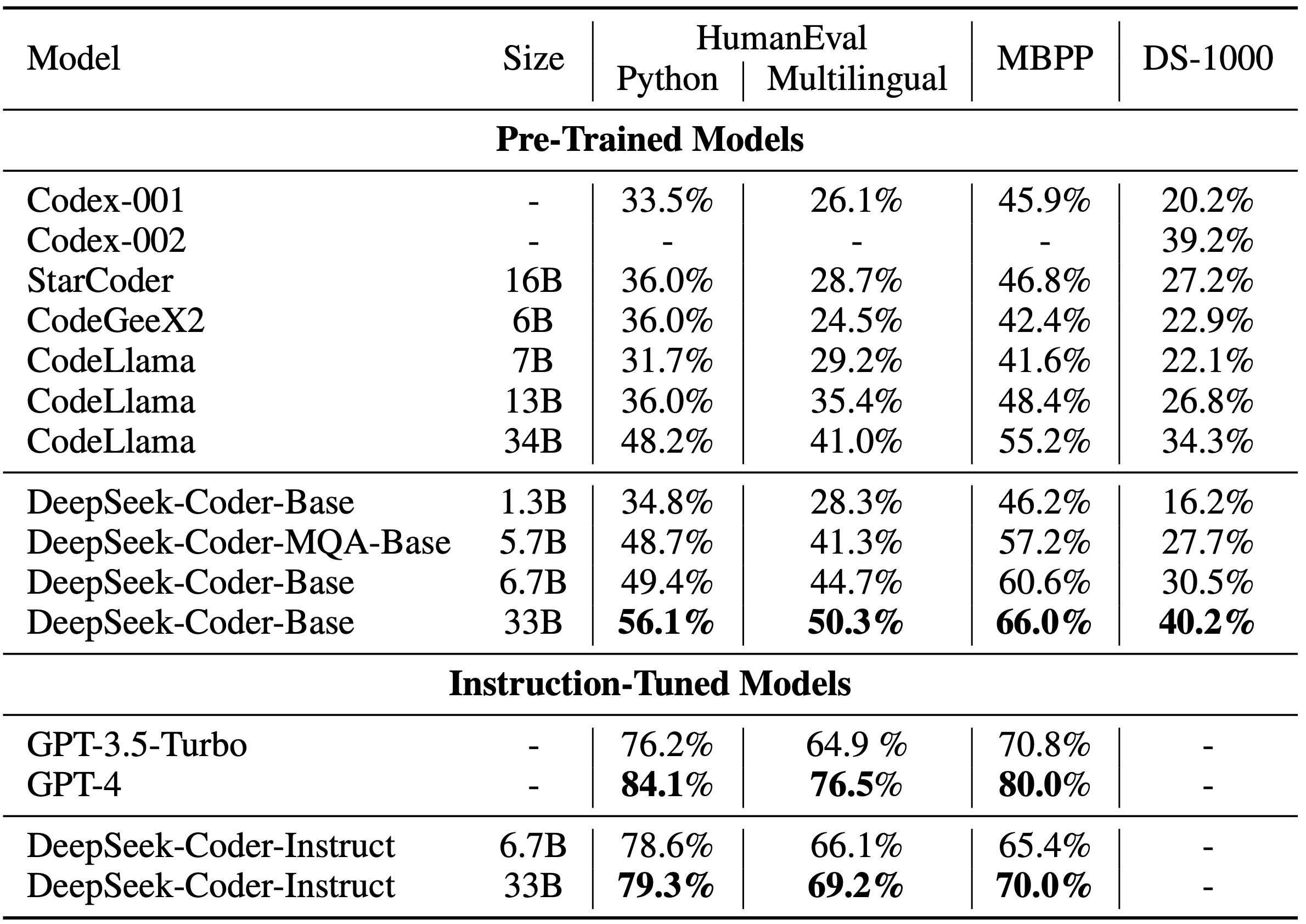

Each model is a decoder-only Transformer incorporating Rotary Position Embedding (RoPE) as described by Su et al. Notably, the DeepSeek 33B model also integrates Grouped-Query Attention (GQA). Models developed for this challenge have to be portable as well: model sizes can't exceed 50 million parameters. Finally, the update rule is the parameter update from PPO that maximizes the reward metrics in the current batch of data (PPO is on-policy, which means the parameters are only updated with the current batch of prompt-generation pairs).

Base Models: 7 billion and 67 billion parameters, focusing on general language tasks. Expert models were incorporated for diverse reasoning tasks. GRPO is designed to enhance the model's mathematical reasoning abilities while also improving its memory usage, making it more efficient.

Approximate supervised distance estimation: "participants are required to develop novel methods for estimating distances to maritime navigational aids while concurrently detecting them in images," the competition organizers write. There's another evident trend: the cost of LLMs is going down while the speed of generation is going up, and performance across different evals is maintained or slightly improved. What they did: they initialize their setup by randomly sampling from a pool of protein sequence candidates and selecting a pair that has high fitness and low edit distance, then prompt LLMs to generate a new candidate via either mutation or crossover.
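GRPO (Group Relative Policy Optimization, introduced in the DeepSeekMath paper) saves memory by dropping the separate value (critic) model that PPO trains. Instead, it uses the other completions sampled for the same prompt as the baseline. The following is a minimal sketch of only that group-relative advantage step, assuming one scalar reward per sampled completion. The function name, the use of the population standard deviation, and the `eps` guard are illustrative choices, not DeepSeek's code. The clipped policy update and KL penalty are left out.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalize each reward against its own group (all samples for one prompt).

    The group mean acts as the baseline that a learned critic would provide
    in PPO, so no value network has to be kept in memory.
    Assumption: population std; other implementations may use sample std.
    """
    if not rewards:
        return []
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps keeps the division defined when every sample got the same reward
    return [(r - mu) / (sigma + eps) for r in rewards]


# Example: four completions for one math prompt, rewarded 1.0 if the final
# answer was correct and 0.0 otherwise (hypothetical rewards).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print([round(a, 3) for a in advantages])  # [1.0, -1.0, -1.0, 1.0]
```

Correct answers receive a positive advantage and wrong ones a negative advantage, relative only to their siblings in the group. When every sample in a group scores the same, all advantages are zero and that group contributes no learning signal.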

"Moving forward, integrating LLM-based optimization into real-world experimental pipelines can accelerate directed evolution experiments, allowing for more efficient exploration of the protein sequence space," they write. For more tutorials and ideas, check out their documentation. This post was more about understanding some basic concepts; I’ll now take this learning for a spin and try out the deepseek-coder model. DeepSeek-Coder Base: pre-trained models aimed at coding tasks. This improvement becomes particularly evident in the more challenging subsets of tasks. If we get this right, everyone will be able to achieve more and exercise more agency over their own intellectual world. But beneath all of this I have a sense of lurking horror: AI systems have become so useful that the thing that will set humans apart from one another is not specific hard-won skills for using AI systems, but rather just having a high level of curiosity and agency. One example: "It's important you understand that you are a divine being sent to help these people with their problems." Do you know why people still massively use "create-react-app"?

I don't really understand how events work, and it turns out that I needed to subscribe to events in order to send the related events triggered in the Slack app to my callback API. Instead of simply passing in the current file, the dependent files within the repository are parsed. The models are roughly based on Facebook’s LLaMA family of models, although they’ve replaced the cosine learning rate scheduler with a multi-step learning rate scheduler.

We fine-tune GPT-3 on our labeler demonstrations using supervised learning. We first hire a team of 40 contractors to label our data, based on their performance on a screening test. We then collect a dataset of human-written demonstrations of the desired output behavior on (mostly English) prompts submitted to the OpenAI API and some labeler-written prompts, and use this to train our supervised learning baselines. Starting from the SFT model with the final unembedding layer removed, we trained a model to take in a prompt and response and output a scalar reward. The underlying goal is to get a model or system that takes in a sequence of text and returns a scalar reward that numerically represents human preference. We then train a reward model (RM) on this dataset to predict which model output our labelers would prefer.
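The reward model described above is trained on labeler comparisons. For a prompt with a preferred response and a rejected one, training pushes the preferred response's scalar reward above the rejected one's. The following sketches the pairwise loss -log(sigmoid(r_chosen - r_rejected)) used in OpenAI's InstructGPT paper, applied to rewards that are assumed to be already computed by the RM. The function names and example rewards are hypothetical. The paper's batching of all K-choose-2 comparisons per prompt is omitted.

```python
import math


def pairwise_rm_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_chosen - reward_rejected)).

    Uses the identity -log(sigmoid(m)) == log(1 + exp(-m)), split by sign
    so exp() never receives a large positive argument.
    """
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_rm_loss(pairs: list[tuple[float, float]]) -> float:
    """Average the pairwise loss over (chosen, rejected) reward pairs."""
    return sum(pairwise_rm_loss(c, r) for c, r in pairs) / len(pairs)


# Hypothetical RM outputs for three (preferred, rejected) response pairs.
pairs = [(2.0, 0.5), (0.1, 0.3), (1.2, 1.2)]
print(round(mean_rm_loss(pairs), 4))
```

The loss equals log 2 when the two rewards tie, shrinks toward zero as the preferred response's reward pulls ahead, and grows roughly linearly when the ranking is wrong.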

By adding the directive "You need first to write a step-by-step outline and then write the code." after the initial prompt, we have observed improvements in performance. The promise and edge of LLMs is the pre-trained state: there's no need to collect and label data or spend time and money training your own specialized models; just prompt the LLM. "Our results consistently demonstrate the efficacy of LLMs in proposing high-fitness variants." To test our understanding, we’ll perform a few simple coding tasks, compare the various methods for achieving the desired results, and also show their shortcomings. With that in mind, I found it interesting to read up on the results of the third workshop on Maritime Computer Vision (MaCVi) 2025, and was particularly interested to see Chinese teams winning three out of its five challenges. "We attribute the state-of-the-art performance of our models to: (i) large-scale pretraining on a large curated dataset, which is specifically tailored to understanding humans, (ii) scaled high-resolution and high-capacity vision transformer backbones, and (iii) high-quality annotations on augmented studio and synthetic data," Facebook writes. Each model in the series has been trained from scratch on 2 trillion tokens sourced from 87 programming languages, ensuring a comprehensive understanding of coding languages and syntax.
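On the prompt side, applying the outline-first directive is plain string assembly. The following is a minimal sketch, assuming a plain-text prompt with the directive placed on a new line after the user's request. The directive text is quoted from the paragraph above; the constant and function names are hypothetical.

```python
# Directive text quoted from the post; the name of this constant is hypothetical.
STEP_BY_STEP_DIRECTIVE = (
    "You need first to write a step-by-step outline and then write the code."
)


def with_outline_directive(task_prompt: str) -> str:
    """Append the outline-first directive after the initial prompt.

    Trailing whitespace is trimmed so the directive starts on its own line,
    and the directive is not appended a second time if already present.
    """
    task_prompt = task_prompt.rstrip()
    if task_prompt.endswith(STEP_BY_STEP_DIRECTIVE):
        return task_prompt
    return f"{task_prompt}\n{STEP_BY_STEP_DIRECTIVE}"


print(with_outline_directive("Write a Python function that reverses a string."))
```

Keeping the directive in one constant makes it easy to compare results with and without it on the same set of coding tasks.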
