3 Nontraditional DeepSeek Techniques That Are Unlike Any You've Ever S…

With a focus on protecting clients from reputational, economic, and political harm, DeepSeek uncovers emerging threats and risks and delivers actionable intelligence to help guide clients through challenging situations. "A lot of other companies focus solely on data, but DeepSeek stands out by incorporating the human element into our analysis to create actionable strategies." It makes sense of big data, the deep web, and the dark web, and makes information accessible through a combination of cutting-edge technology and human capital. With an unmatched level of human intelligence expertise, DeepSeek uses state-of-the-art web intelligence technology to monitor the dark web and deep web and identify potential threats before they can cause damage. With the bank’s reputation on the line and the potential for resulting financial loss, we knew that we needed to act quickly to prevent widespread, long-term damage. DeepSeek's hiring preferences target technical abilities rather than work experience, so most new hires are either recent university graduates or developers whose A.I.

We further conduct supervised fine-tuning (SFT) and Direct Preference Optimization (DPO) on the DeepSeek LLM Base models, resulting in the DeepSeek Chat models. The Chat versions of both Base models were released concurrently. Furthermore, open-ended evaluations show that DeepSeek LLM 67B Chat exhibits superior performance compared to GPT-3.5. From steps 1 and 2, you should now have a hosted LLM running. Our evaluation results show that DeepSeek LLM 67B surpasses LLaMA-2 70B on various benchmarks, particularly in the domains of code, mathematics, and reasoning. CodeLlama generated an incomplete function that aimed to process a list of numbers, filtering out negatives and squaring the results. To support a broader and more diverse range of research in both academic and commercial communities, we are providing access to the intermediate checkpoints of the base model from its training process. After weeks of targeted monitoring, we uncovered a far more significant threat: a notorious gang had begun buying and wearing the company’s uniquely identifiable apparel and using it as a symbol of gang affiliation, posing a serious risk to the company’s image through this negative association.
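DPO trains directly on preference pairs (a preferred and a dispreferred response to the same prompt) instead of fitting a separate reward model. As a minimal sketch, assuming the standard per-pair DPO objective and a hypothetical `beta` of 0.1 (not a value reported for DeepSeek), the loss for one pair can be computed from summed response log-probabilities under the policy being trained and a frozen reference model. This is an illustration of the technique, not DeepSeek's training code.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-pair DPO loss: -log(sigmoid(beta * margin)).

    Each argument is the summed log-probability of a full response
    (chosen = preferred, rejected = dispreferred) given the same prompt.
    beta = 0.1 is a hypothetical default for illustration.
    """
    # Log-ratio of the trained policy vs. the frozen reference for each response.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # Positive margin: the policy favours the chosen response more than the reference does.
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(m)) == softplus(-m), computed stably for large |m|.
    return softplus(-margin)


if __name__ == "__main__":
    # Policy identical to the reference: margin is 0, so the loss is log(2).
    print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
    # Policy has shifted probability toward the chosen response: loss drops below log(2).
    print(dpo_loss(-10.0, -16.0, -12.0, -15.0))
```

In the DPO derivation, `beta` plays the role of the KL-penalty strength that keeps the policy close to the reference model.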

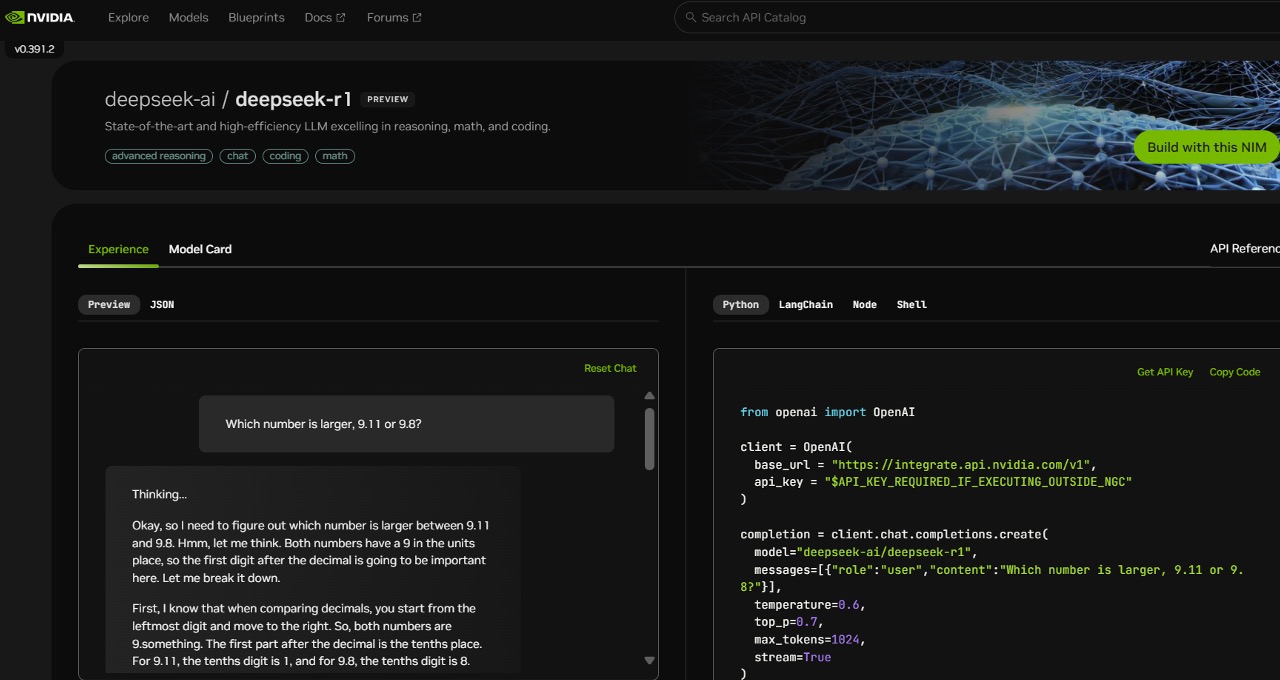

DeepSeek-R1-Distill models are fine-tuned from open-source models using samples generated by DeepSeek-R1. "If they’d spend more time working on the code and reproduce the DeepSeek concept themselves, it will be better than talking on the paper," Wang added, using an English translation of a Chinese idiom about people who engage in idle talk. The post-training side is less innovative, but gives more credence to those optimizing for online RL training, as DeepSeek did this (with a form of Constitutional AI, as pioneered by Anthropic)⁴. Training data: compared to the original DeepSeek-Coder, DeepSeek-Coder-V2 expanded the training data substantially by adding a further 6 trillion tokens, increasing the total to 10.2 trillion tokens. DeepSeekMoE is implemented in the most powerful DeepSeek models: DeepSeek V2 and DeepSeek-Coder-V2. DeepSeek-Coder-6.7B is one of the DeepSeek Coder series of large code language models, pre-trained on 2 trillion tokens of 87% code and 13% natural language text. We delve into the study of scaling laws and present our distinctive findings that facilitate scaling of large-scale models in two commonly used open-source configurations, 7B and 67B. Guided by the scaling laws, we introduce DeepSeek LLM, a project dedicated to advancing open-source language models with a long-term perspective.
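DeepSeekMoE is a mixture-of-experts (MoE) layer design. As a rough sketch, assuming the general shape described in the DeepSeekMoE paper (a few always-active shared experts plus many fine-grained routed experts, of which each token uses only the top-k by softmax router score, added to a residual connection), one MoE layer for a single token vector can be written as below. The expert functions, router centroids, and `top_k` are hypothetical toy values; real implementations add load-balancing losses, batching, and expert parallelism, all omitted here.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Softmax with max-subtraction for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector,
                shared_experts: Sequence[Expert],
                routed_experts: Sequence[Expert],
                centroids: Sequence[Vector],
                top_k: int) -> Vector:
    """Toy MoE layer for one token vector x (simplified sketch).

    Shared experts always run; only the top_k routed experts by router
    score run, each weighted by its softmax score. A residual connection
    adds x back. No load balancing, batching, or capacity limits.
    """
    # Router affinity: dot product of the token with each routed expert's centroid.
    logits = [sum(c * xi for c, xi in zip(centroid, x)) for centroid in centroids]
    scores = softmax(logits)
    # Indices of the top_k highest-scoring routed experts.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]

    out = list(x)  # residual connection
    for expert in shared_experts:
        out = [o + y for o, y in zip(out, expert(x))]
    for i in chosen:
        out = [o + scores[i] * y for o, y in zip(out, routed_experts[i](x))]
    return out


if __name__ == "__main__":
    # Hypothetical toy experts and router centroids, for illustration only.
    shared = [lambda v: [0.5 * vi for vi in v]]
    routed = [lambda v: [1.0, 1.0], lambda v: [2.0, 2.0], lambda v: [10.0, 10.0]]
    centroids = [[5.0, 0.0], [0.0, 0.0], [-5.0, 0.0]]
    # The router strongly prefers expert 0 for this token; experts 1 and 2 are skipped.
    print(moe_forward([1.0, 0.0], shared, routed, centroids, top_k=1))
```

Only the selected experts are evaluated, which is why an MoE model can hold many parameters while activating a small fraction of them per token.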

Warschawski delivers the expertise and experience of a large firm coupled with the personalized attention and care of a boutique agency. Warschawski has received the highest recognition of being named "U.S. Small Agency of the Year" (three years in a row) and the "Best Small Agency to Work For" in the U.S. For ten consecutive years, it has also been ranked as one of the top 30 "Best Agencies to Work For" in the U.S. Warschawski is dedicated to providing clients with the highest quality of Marketing, Advertising, Digital, Public Relations, Branding, Creative Design, Web Design/Development, Social Media, and Strategic Planning services. The CEO of a major athletic apparel brand announced public support for a political candidate, and forces who opposed the candidate began including the CEO’s name in their negative social media campaigns. LLaMA everywhere: the interview also indirectly acknowledges an open secret, namely that a large share of other Chinese AI startups and major companies are simply re-skinning Facebook’s LLaMA models. A European football league hosted a finals game at a large stadium in a major European city.
