Who's DeepSeek?

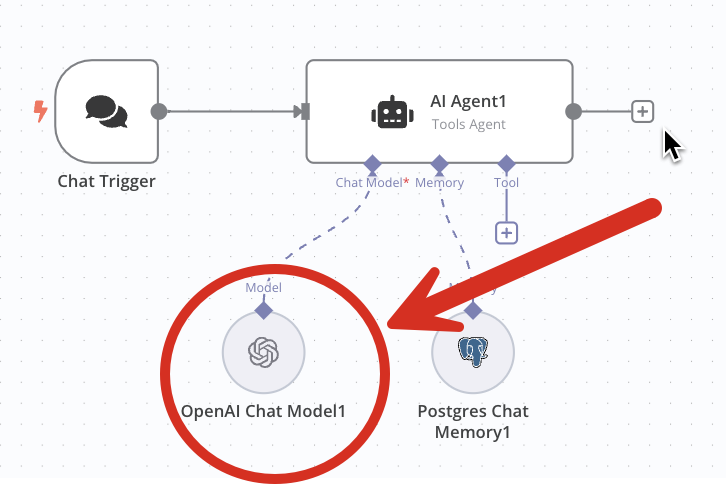

KEY environment variable with your free DeepSeek API key. It is production-ready, with support for caching, fallbacks, retries, timeouts, and load balancing, and it can be edge-deployed for minimal latency. We already see that trend with tool-calling models, and if you saw the latest Apple WWDC, you can imagine how usable LLMs are becoming. As we have seen throughout this blog, these have been truly exciting times with the launch of these five powerful language models.

In this blog, we'll explore how generative AI is reshaping developer productivity and redefining the entire software development lifecycle (SDLC). How is generative AI impacting developer productivity? Over the years, I've used many developer tools, developer productivity tools, and general productivity tools like Notion. Most of these tools have helped me get better at what I wanted to do and brought sanity to several of my workflows.

Smarter Conversations: LLMs are getting better at understanding and responding to human language. Imagine I have to quickly generate an OpenAPI spec: today I can do it with a local LLM like Llama using Ollama.

Turning small models into reasoning models: "To equip more efficient smaller models with reasoning capabilities like DeepSeek-R1, we directly fine-tuned open-source models like Qwen and Llama using the 800k samples curated with DeepSeek-R1," DeepSeek write.
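
The gateway features listed above (retries, timeouts, fallbacks) reduce to a simple control loop. The following is a minimal Python sketch, assuming each provider is a plain callable that takes a prompt and returns text; `call_with_fallback`, `flaky_primary`, and `backup` are hypothetical names for illustration, not part of Portkey's, DeepSeek's, or any other real API.

```python
import time
from typing import Callable, Sequence

# Hypothetical provider type: any callable mapping a prompt to a completion.
Provider = Callable[[str], str]


def call_with_fallback(
    providers: Sequence[Provider],
    prompt: str,
    retries: int = 2,
    backoff_s: float = 0.0,
) -> str:
    """Try providers in order; retry each one before falling back to the next."""
    if not providers:
        raise ValueError("at least one provider is required")
    errors: list[Exception] = []
    for provider in providers:
        for attempt in range(retries + 1):
            try:
                return provider(prompt)
            except Exception as exc:  # a real gateway would retry only transient errors
                errors.append(exc)
                if attempt < retries and backoff_s > 0:
                    time.sleep(backoff_s * 2**attempt)  # exponential backoff
    raise RuntimeError(f"all providers failed ({len(errors)} attempts)") from errors[-1]


# Usage: a primary that always times out and a backup that answers.
calls = {"primary": 0}


def flaky_primary(prompt: str) -> str:
    calls["primary"] += 1
    raise TimeoutError("primary timed out")


def backup(prompt: str) -> str:
    return f"backup answered: {prompt}"


result = call_with_fallback([flaky_primary, backup], "hello")
print(result, calls["primary"])  # backup answered: hello 3
```

With the default `retries=2`, the primary is attempted three times before the backup is used; a production gateway would also enforce per-call timeouts and distinguish retryable from permanent errors.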

Detailed Analysis: Provide in-depth financial or technical analysis using structured data inputs. Coming from China, DeepSeek's technical innovations are turning heads in Silicon Valley. Today, they're massive intelligence hoarders. Nvidia has released Nemotron-4 340B, a family of models designed to generate synthetic data for training large language models (LLMs). Another significant benefit of Nemotron-4 is its positive environmental impact. Nemotron-4 also promotes fairness in AI. Here are some examples of how to use our model. And as advances in hardware drive down costs and algorithmic progress increases compute efficiency, smaller models will increasingly gain access to what are now considered dangerous capabilities. In other words, you take a bunch of robots (here, some relatively simple Google bots with a manipulator arm, eyes, and mobility) and give them access to a giant model. DeepSeek LLM is an advanced language model available in both 7 billion and 67 billion parameter versions. Let be parameters. The parabola intersects the line at two points and . The paper attributes the model's mathematical reasoning abilities to two key factors: leveraging publicly available web data and introducing a novel optimization technique called Group Relative Policy Optimization (GRPO).
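
The core idea of GRPO is that, instead of training a separate value model, each sampled answer's reward is compared against the other answers sampled for the same prompt. The sketch below shows only that group-relative advantage, in the outcome-reward form (reward minus group mean, divided by group standard deviation); it omits the clipped policy-gradient objective and KL penalty, and the function name and the choice of population standard deviation are assumptions for illustration.

```python
import statistics
from typing import Sequence


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-8) -> list[float]:
    """Normalize each sampled output's reward against its own group.

    For one prompt, a group of outputs is sampled and scored; the advantage of
    output i is (r_i - mean(r)) / std(r), so no learned value model is needed.
    """
    if len(rewards) < 2:
        raise ValueError("need at least two sampled outputs per group")
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled answers to one math problem: 1.0 = correct, 0.0 = wrong.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print([round(a, 3) for a in advantages])  # [1.0, -1.0, -1.0, 1.0]
```

Correct answers get a positive advantage and wrong ones a negative advantage; if every answer in the group earns the same reward, all advantages are zero and that group contributes no learning signal.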

Llama 3 405B used 30.8M GPU hours for training, compared with DeepSeek V3's 2.6M GPU hours (more details in the Llama 3 model card). Generating synthetic data is more resource-efficient than traditional training methods. 0.9 per output token compared to GPT-4o's $15. As developers and enterprises pick up generative AI, I only expect more solution-oriented models in the ecosystem, and perhaps more open-source ones too. However, with generative AI, it has become turnkey. Personal Assistant: Future LLMs might be able to manage your schedule, remind you of important events, and even help you make decisions by providing useful information. This model is a blend of the impressive Hermes 2 Pro and Meta's Llama-3 Instruct, resulting in a powerhouse that excels at general tasks, conversations, and even specialized functions like calling APIs and generating structured JSON data. It helps you with general conversations, completing specific tasks, or handling specialized functions. Whether it's enhancing conversations, generating creative content, or providing detailed analysis, these models really make a big impact. It also highlights how I expect Chinese companies to deal with issues like the impact of export controls: by building and refining efficient systems for large-scale AI training and sharing the details of their buildouts openly.
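
For scale, the two GPU-hour figures quoted above differ by roughly a factor of twelve. This is simple division of the stated numbers only; it does not account for differences in GPU type, model size, or what each count includes:

$$
\frac{30.8 \times 10^{6}\ \text{GPU hours (Llama 3 405B)}}{2.6 \times 10^{6}\ \text{GPU hours (DeepSeek V3)}} \approx 11.8
$$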

At Portkey, we're helping developers building on LLMs with a blazing-fast AI Gateway that provides resiliency features like load balancing, fallbacks, and semantic caching. A Blazing Fast AI Gateway. The praise for DeepSeek-V2.5 follows a still-ongoing controversy around HyperWrite's Reflection 70B, which co-founder and CEO Matt Shumer claimed on September 5 was "the world's top open-source AI model," according to his internal benchmarks, only to see those claims challenged by independent researchers and the wider AI research community, who have so far failed to reproduce the stated results. There's some controversy over DeepSeek training on outputs from OpenAI models, which OpenAI's terms of service forbid for "competitors," but this is now harder to prove given how many ChatGPT outputs are freely available on the internet. Instead of simply passing in the current file, the dependent files within the repository are parsed. This repo contains GGUF format model files for DeepSeek's Deepseek Coder 1.3B Instruct. Step 3: Concatenate dependent files to form a single example and apply repo-level MinHash for deduplication. Downloaded over 140k times in a week.
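
The repo-level preprocessing described above (order dependent files, concatenate them into one example, then deduplicate whole repositories with MinHash) can be sketched in Python. This is a minimal illustration under stated assumptions, not DeepSeek's actual pipeline: dependencies are supplied as a dict of import edges rather than parsed from source, MinHash uses seeded BLAKE2b hashes over character 5-grams instead of true permutations, and the 0.85 threshold and every function name here are hypothetical.

```python
import hashlib
from graphlib import TopologicalSorter


def dependency_order(files: dict[str, str], deps: dict[str, set[str]]) -> list[str]:
    """Topologically sort files so each one comes after the files it imports."""
    graph = {path: {d for d in deps.get(path, set()) if d in files} for path in files}
    return list(TopologicalSorter(graph).static_order())  # raises graphlib.CycleError on cycles


def concat_repo(files: dict[str, str], deps: dict[str, set[str]]) -> str:
    """Join a repository's files, in dependency order, into one training example."""
    return "\n".join(f"# {path}\n{files[path]}" for path in dependency_order(files, deps))


def minhash_signature(text: str, num_hashes: int = 64, k: int = 5) -> list[int]:
    """MinHash over character k-grams, using seeded BLAKE2b hashes instead of permutations."""
    grams = {text[i : i + k] for i in range(max(1, len(text) - k + 1))}
    return [
        min(
            int.from_bytes(hashlib.blake2b(f"{seed}:{g}".encode(), digest_size=8).digest(), "big")
            for g in grams
        )
        for seed in range(num_hashes)
    ]


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """The fraction of matching signature slots estimates the Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def is_near_duplicate(repo_a: str, repo_b: str, threshold: float = 0.85) -> bool:
    """Hypothetical threshold; compares whole concatenated repos, not single files."""
    return estimated_jaccard(minhash_signature(repo_a), minhash_signature(repo_b)) >= threshold


files = {
    "main.py": "from utils import add\nprint(add(1, 2))\n",
    "utils.py": "def add(a, b):\n    return a + b\n",
}
deps = {"main.py": {"utils.py"}}  # main.py imports utils.py
example = concat_repo(files, deps)
print(dependency_order(files, deps))  # ['utils.py', 'main.py']
print(is_near_duplicate(example, example))  # True
```

Deduplicating the concatenated repository, rather than individual files, keeps each repository's cross-file context intact. Note that this sketch fails on circular imports, which a real pipeline would have to break somehow.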
