9 Things You Can Learn From Buddhist Monks About DeepSeek


To ensure unbiased and thorough performance assessments, DeepSeek AI designed new problem sets, such as the Hungarian National High-School Exam and Google's instruction-following evaluation dataset. The evaluation results demonstrate that the distilled smaller dense models perform exceptionally well on benchmarks. They've got the intuitions about scaling up models. Its latest model was released on 20 January, quickly impressing AI experts before it got the attention of the entire tech industry, and the world. Its V3 model raised some awareness about the company, although its content restrictions around sensitive topics concerning the Chinese government and its leadership sparked doubts about its viability as an industry competitor, the Wall Street Journal reported. These programs learn from huge swathes of data, including online text and images, so that they can generate new content. AI can, at times, make a computer seem like a person. By 27 January 2025 the app had surpassed ChatGPT as the highest-rated free app on the iOS App Store in the United States; its chatbot reportedly answers questions, solves logic problems and writes computer programs on par with other chatbots on the market, according to benchmark tests used by American AI companies. Milmo, Dan; Hawkins, Amy; Booth, Robert; Kollewe, Julia (28 January 2025). "'Sputnik moment': $1tn wiped off US stocks after Chinese firm unveils AI chatbot" - via The Guardian.

The pipeline incorporates two RL stages aimed at discovering improved reasoning patterns and aligning with human preferences, as well as two SFT stages that serve as the seed for the model's reasoning and non-reasoning capabilities. To address these issues and further enhance reasoning performance, we introduce DeepSeek-R1, which incorporates cold-start data before RL. The open source DeepSeek-R1, as well as its API, will benefit the research community to distill better smaller models in the future. Notably, it is the first open research to validate that reasoning capabilities of LLMs can be incentivized purely through RL, without the need for SFT. But now that DeepSeek-R1 is out and available, including as an open weight release, all these forms of control have become moot. DeepSeek-R1-Distill-Qwen-1.5B, DeepSeek-R1-Distill-Qwen-7B, DeepSeek-R1-Distill-Qwen-14B and DeepSeek-R1-Distill-Qwen-32B are derived from the Qwen-2.5 series, which are originally licensed under the Apache 2.0 License, and now finetuned with 800k samples curated with DeepSeek-R1. But it sure makes me wonder just how much money Vercel has been pumping into the React team, how many members of that team it stole and how that affected the React docs and the team itself, either directly or through "my colleague used to work here and now is at Vercel and they keep telling me Next is great".

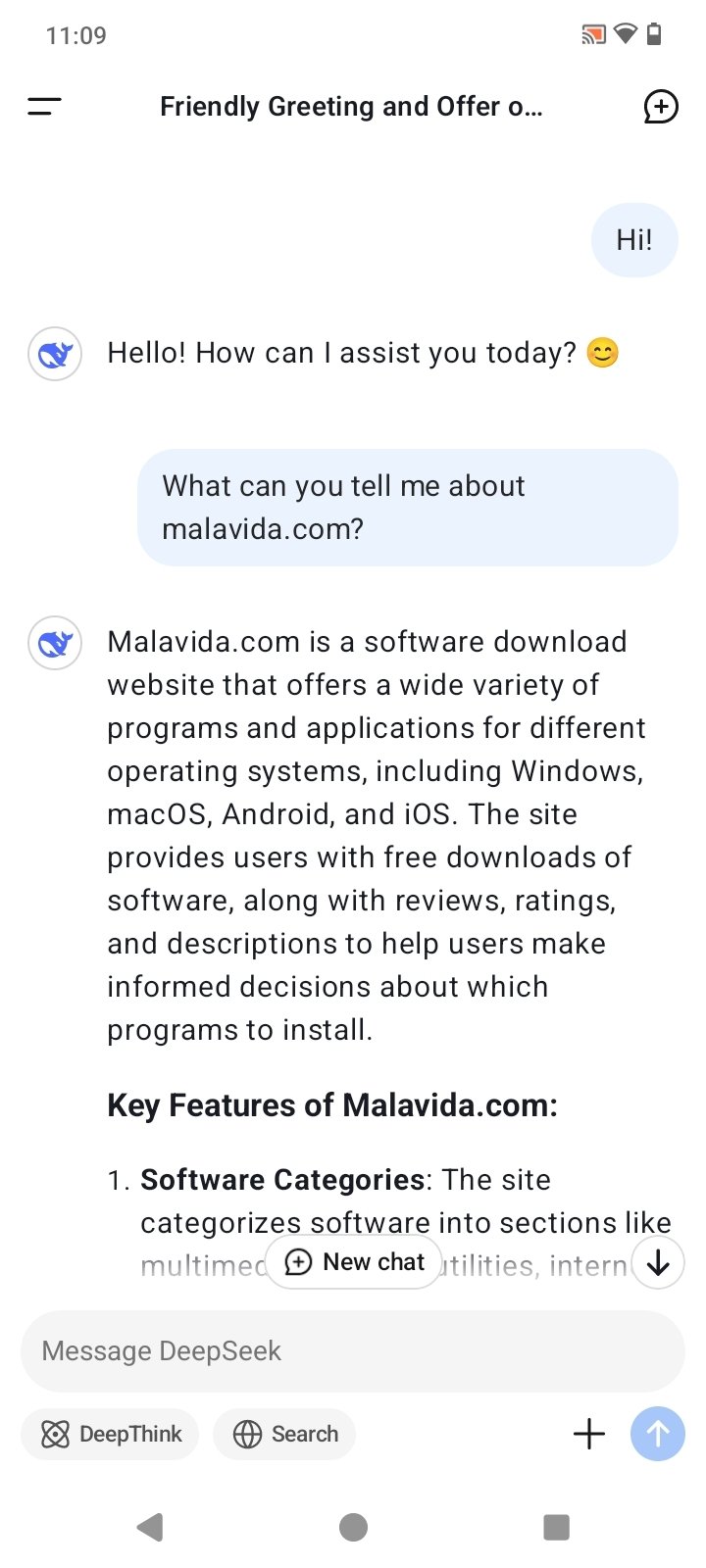

DeepSeek is the name of a free AI-powered chatbot, which looks, feels and works very much like ChatGPT. Millions of people use tools such as ChatGPT to help them with everyday tasks like writing emails, summarising text, and answering questions, and others even use them to help with basic coding and studying. The implementation illustrated the use of pattern matching and recursive calls to generate Fibonacci numbers, with basic error-checking. Be careful with DeepSeek, Australia says - so is it safe to use? Please use our setting to run these models. DeepSeek-R1-Distill models can be utilized in the same manner as Qwen or Llama models. Chinese companies developing the same technologies. You need to understand that Tesla is in a better position than the Chinese to take advantage of new techniques like those used by DeepSeek. What makes DeepSeek so special is the company's claim that it was built at a fraction of the cost of industry-leading models like OpenAI's, because it uses fewer advanced chips. Read the research paper: AutoRT: Embodied Foundation Models for Large Scale Orchestration of Robotic Agents (GitHub, PDF).

Cerebras FLOR-6.3B, Allen AI OLMo 7B, Google TimesFM 200M, AI Singapore Sea-Lion 7.5B, ChatDB Natural-SQL-7B, Brain GOODY-2, Alibaba Qwen-1.5 72B, Google DeepMind Gemini 1.5 Pro MoE, Google DeepMind Gemma 7B, Reka AI Reka Flash 21B, Reka AI Reka Edge 7B, Apple Ask 20B, Reliance Hanooman 40B, Mistral AI Mistral Large 540B, Mistral AI Mistral Small 7B, ByteDance 175B, ByteDance 530B, HF/ServiceNow StarCoder 2 15B, HF Cosmo-1B, SambaNova Samba-1 1.4T CoE. We demonstrate that the reasoning patterns of larger models can be distilled into smaller models, resulting in better performance compared to the reasoning patterns discovered through RL on small models. This approach allows the model to explore chain-of-thought (CoT) for solving complex problems, resulting in the development of DeepSeek-R1-Zero. A machine uses the technology to learn and solve problems, typically by being trained on massive amounts of data and recognising patterns. Reinforcement learning is a type of machine learning where an agent learns by interacting with an environment and receiving feedback on its actions.
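To make the definition of reinforcement learning above concrete, here is a minimal sketch of tabular Q-learning on a toy five-state corridor. It is a generic textbook illustration, not the RL procedure used to train DeepSeek-R1; the environment, the `step`, `train` and `greedy_policy` names, and all hyperparameters are assumptions made up for this example.

```python
import random

N_STATES = 5      # states 0..4; reaching state 4 ends the episode
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Hypothetical environment: return (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    # The only feedback is a reward of 1.0 for reaching the goal state.
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table of action values Q[state][action] by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on episode length
            # Epsilon-greedy: explore sometimes, and whenever the values tie.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-learning update: nudge the estimate toward the reward plus
            # the discounted value of the best action in the next state.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best-known action for each non-terminal state."""
    return [0 if q[s][0] > q[s][1] else 1 for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

The agent is never told that moving right is correct; it only sees the reward at the goal, and the update rule propagates that signal back through the earlier states over repeated episodes.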
