DeepSeek ChatGPT: Learn How to Be More Productive

The company claims its R1 release offers performance on par with the latest iteration of ChatGPT. Unable to rely on the latest chips, DeepSeek and others have been forced to do more with less, using ingenuity rather than brute force. DeepSeek's release also focuses attention on US export curbs on such advanced semiconductors to China, which were meant to prevent a breakthrough of the sort that DeepSeek appears to represent. For the growing chorus of people concerned about the environmental impact of generative AI (one ChatGPT query requires nearly 10 times as much energy as a Google search), the fact that DeepSeek's breakthrough uses significantly less computing power than U.S.-created options is a welcome development. DeepSeek's emergence may offer a counterpoint to the widespread belief that the future of AI will require ever-growing amounts of computing power and energy. The openness of the development process encourages diverse contributions, making it possible for underrepresented groups to shape the future of AI. Unlocking AI's true potential: is the future closer than we think? Countries outside the AI superpowers or well-established tech hubs now have a shot at unlocking a wave of innovation using affordable training methods. We've entered an era of AI competition where the pace of innovation is likely to become much more frenetic than any of us expect, and where more small players and middle powers will be entering the fray, using the training methods shared by DeepSeek.

In our post, we've shown how we implemented efficient MoE training through PyTorch Distributed and MegaBlocks on Foundry. DeepSeek has reported that the final training run of a previous iteration of the model that R1 is built on, released last month, cost less than $6 million. DistRL is designed to help train models that learn to take actions on computers, and it is designed so that centralized model training happens on a big blob of compute while data acquisition happens on edge devices running, in this case, Android. Though not fully detailed by the company, the cost of training and developing DeepSeek's models appears to be only a fraction of what's required for the best products of OpenAI or Meta Platforms Inc. DeepSeek, a Chinese artificial-intelligence startup that's just over a year old, has stirred awe and consternation in Silicon Valley after demonstrating AI models that offer performance comparable to the world's best chatbots at seemingly a fraction of their development cost. Competitive benchmark tests have shown that the performance of these Chinese open-source models is on par with the best closed-source Western models.

In our post, we’ve proven how we carried out efficient MoE training by means of Pytorch Distributed and MegaBlocks on Foundry. DeepSeek has reported that the ultimate training run of a previous iteration of the mannequin that R1 is constructed from, launched final month, value less than $6 million. DistRL is designed to help practice fashions that learn to take actions on computers and is designed in order that centralized mannequin training occurs on a giant blob of compute, whereas data acquisition happens on edge devices operating, on this case, Android. Though not fully detailed by the company, the price of training and developing DeepSeek’s fashions seems to be solely a fraction of what’s required for OpenAI or Meta Platforms Inc.’s finest products. DeepSeek, a Chinese synthetic-intelligence startup that’s simply over a yr previous, has stirred awe and consternation in Silicon Valley after demonstrating AI models that provide comparable performance to the world’s greatest chatbots at seemingly a fraction of their growth cost. Competitive benchmark assessments have proven that the performance of those Chinese open supply models are on par with the most effective closed supply Western models.

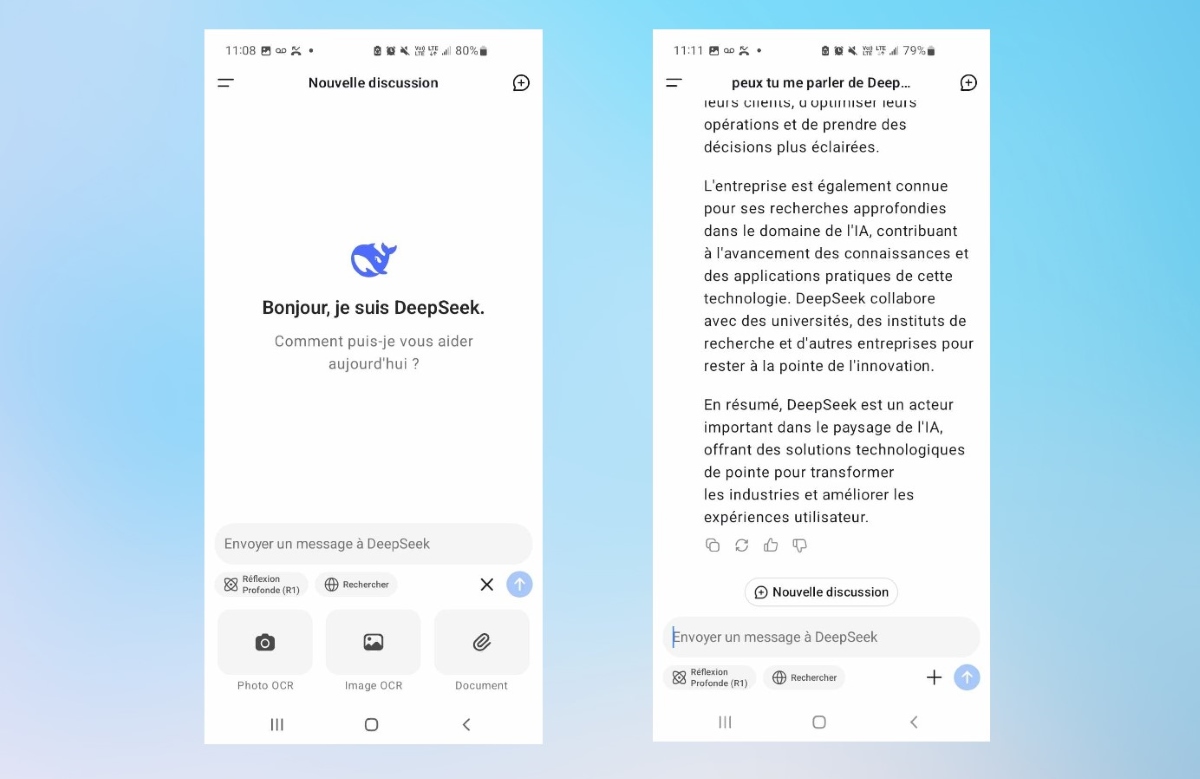

DeepSeek says R1's performance approaches or improves on that of rival models in several major benchmarks, such as AIME 2024 for mathematical tasks, MMLU for general knowledge, and AlpacaEval 2.0 for question-and-answer performance. DeepSeek is an advanced open-source AI language model that aims to process vast amounts of information and generate accurate, high-quality language outputs within specific domains such as education, coding, or research. Training efficiency: the model was fine-tuned using advanced reinforcement learning techniques, incorporating reinforcement learning from human feedback (RLHF) for precise output generation.

The AI developer has been closely watched since the release of its earliest model in 2023. Then in November, it gave the world a glimpse of its DeepSeek R1 reasoning model, designed to mimic human thinking. Meta, for its part, introduced Spirit LM, an open-source model that combines text and speech inputs and outputs.

Two, China is becoming the global leader in open-source AI. China's Big Tech giant Alibaba has made Qwen, its flagship AI foundation model, open source. So have newer AI startups like Minimax, which also launched a series of open-source models in January (both foundational and multimodal, that is, capable of handling multiple types of media). Instead, he focused on PhD students from China's top universities, including Peking University and Tsinghua University, who were eager to prove themselves.

If Western efforts to hamper or handicap China's AI progress are likely to be futile, then the real race has only just begun: lean, creative engineering will be what wins the game, not sheer financial heft and export controls. But then, in a flash, everything changed: the honeymoon phase ended. The success of the study has the potential to redefine the existing $600 million industry dedicated to helping software engineers find and fix bugs. AI shouldn't wait for users to ask about ethical implications; it should analyze potential ethical issues upfront. They're national security issues. I might send a prompt to the AI like, "What are five good and bad things about biotech?" The app distinguishes itself from other chatbots like OpenAI's ChatGPT by articulating its reasoning before delivering a response to a prompt. This app can help you better understand a whole range of topics, from sciences like biology and physics, to math essentials like algebra and trigonometry, and even poetry for your English lessons. Smart move. The CCP is even less reliable than the CDC. It was a bold move by China to establish diplomatic and trade relations with foreign lands while exploring overseas opportunities.