7 Simple Suggestions for Using DeepSeek AI to Get Ahead of Your Competi…


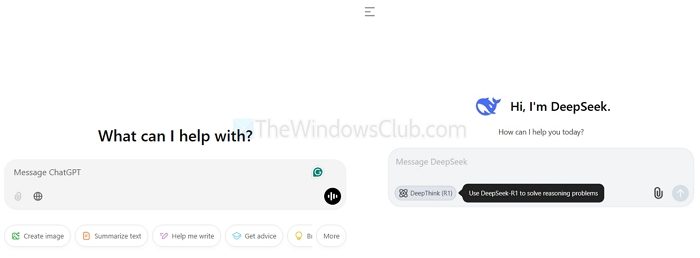

While ChatGPT remains a household name, DeepSeek's resource-efficient architecture and domain-specific prowess make it a superior choice for technical workflows. It uses a full transformer architecture with some changes (post-layer-normalisation with DeepNorm, rotary embeddings). Additionally, it uses advanced techniques such as Chain of Thought (CoT) to improve reasoning capabilities. Here's a quick thought experiment for you: let's say you could add a chemical to everyone's food to save countless lives, but the stipulation is that you could not tell anyone. Quick Deployment: Thanks to ChatGPT's pre-trained models and user-friendly APIs, integration into existing systems can be handled very quickly. Quick response times improve user experience, leading to increased engagement and retention rates. Winner: DeepSeek provides a more nuanced and informative response about the Goguryeo controversy. It has also caused controversy. Then, in 2023, Liang, who has a master's degree in computer science, decided to pour the fund's resources into a new company called DeepSeek that would build its own cutting-edge models and, hopefully, develop artificial general intelligence. While approaches for adapting models to the chat setting were developed in 2022 and before, wide adoption of these techniques really took off in 2023, emphasizing the growing use of these chat models by the general public as well as the growing manual evaluation of the models by chatting with them ("vibe-check" evaluation).

The Pythia models were released by the open-source non-profit lab Eleuther AI, and were a suite of LLMs of different sizes, trained on completely public data, provided to help researchers understand the different steps of LLM training. The tech-heavy Nasdaq plunged by 3.1% and the broader S&P 500 fell 1.5%. The Dow, boosted by health care and consumer companies that could be hurt by AI, was up 289 points, or about 0.7%. Despite these advancements, the rise of Chinese AI companies has not been free from scrutiny. However, its data storage practices in China have sparked concerns about privacy and national security, echoing debates around other Chinese tech companies. LAION (a non-profit open-source lab) released the Open Instruction Generalist (OIG) dataset, 43M instructions both created with data augmentation and compiled from other pre-existing data sources. The performance of these models was a step ahead of previous models both on open leaderboards like the Open LLM leaderboard and on some of the most difficult benchmarks like Skill-Mix. Reinforcement learning from human feedback (RLHF) is a specific approach that aims to align what the model predicts with what humans like best (depending on specific criteria).
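The text does not say how "what humans like best" is turned into a training signal. As a minimal sketch, assuming a reward model that assigns a scalar score to each response, one common formulation is a pairwise (Bradley-Terry style) preference loss. The function name and the example scores below are hypothetical and are not taken from any specific RLHF implementation.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise preference loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Assumption: a reward model has already scored both responses, and a human
    labeler preferred the "chosen" one. The loss is small when the reward
    model agrees with the human and large when it disagrees.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals softplus(-m); this form avoids overflow
    # for large |m|.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Hypothetical scores for illustration only.
loss_agree = preference_loss(2.0, 0.0)     # reward model agrees with the human
loss_disagree = preference_loss(0.0, 2.0)  # reward model disagrees
loss_tie = preference_loss(0.0, 0.0)       # no preference: equals log(2)
```

Minimizing this loss over many human comparisons pushes the reward model to score preferred responses higher; a separate reinforcement learning step (not shown) would then optimize the language model against that reward.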

GPT-4 was anticipated, in unconfirmed pre-release reports, to be trained with 100 trillion machine learning parameters and to go beyond mere textual outputs. Two bilingual English-Chinese model series were released: Qwen, from Alibaba, with models of 7 to 70B parameters trained on 2.4T tokens, and Yi, from 01-AI, with models of 6 to 34B parameters trained on 3T tokens. DeepMind's own model, Chinchilla (not open source), was a 70B parameters model (a third of the size of the above models) but trained on 1.4T tokens of data (between 3 and 4 times more data). Smaller or more specialized open LLMs were also released, mostly for research purposes: Meta released the Galactica series, LLMs of up to 120B parameters pre-trained on 106B tokens of scientific literature, and EleutherAI released the GPT-NeoX-20B model, an entirely open-source (architecture, weights, data included) decoder transformer model trained on 500B tokens (using RoPE and some changes to attention and initialization), to provide a full artifact for scientific investigations.
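To make the "smaller model, more data" comparison concrete, the parameter and token counts quoted above can be turned into a tokens-per-parameter ratio. This is only an arithmetic sketch using the figures as stated in the text; the dictionary and function names are illustrative.

```python
# (parameters, training tokens) as quoted in the paragraph above.
MODELS = {
    "Chinchilla": (70e9, 1.4e12),
    "Galactica": (120e9, 106e9),
    "GPT-NeoX-20B": (20e9, 500e9),
}


def tokens_per_parameter(params: float, tokens: float) -> float:
    """How many training tokens were seen per model parameter."""
    return tokens / params


ratios = {name: tokens_per_parameter(p, t) for name, (p, t) in MODELS.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f} tokens per parameter")
```

With these figures the script prints 20.0 for Chinchilla, 0.9 for Galactica, and 25.0 for GPT-NeoX-20B, which shows how differently models of similar scale can be positioned on the data axis.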

The largest model of this family is a 175B parameters model trained on 180B tokens of data from mostly public sources (books, social data through Reddit, news, Wikipedia, and other various internet sources). The 130B parameters model was trained on 400B tokens of English and Chinese internet data (The Pile, Wudao Corpora, and other Chinese corpora). [...] 1T tokens. The small 13B LLaMA model outperformed GPT-3 on most benchmarks, and the largest LLaMA model was state of the art when it came out. The MPT models, released by MosaicML a couple of months later, were close in performance but came with a license allowing commercial use and with the details of their training mix. The authors found that, overall, for the average compute budget being spent on LLMs, models should be smaller but trained on considerably more data. From this perspective, they decided to train smaller models on even more data and for more steps than was usually done, thereby reaching higher performance at a smaller model size (the trade-off being training compute efficiency). For more detailed information, see this blog post, the original RLHF paper, or the Anthropic paper on RLHF. These models use a decoder-only transformer architecture, following the tricks of the GPT-3 paper (a specific weights initialization, pre-normalization), with some changes to the attention mechanism (alternating dense and locally banded attention layers).
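The phrase "alternating dense and locally banded attention layers" can be illustrated with attention masks. The sketch below is an assumption-laden toy: the window size, the choice of even layers as dense and odd layers as banded, and all function names are hypothetical, since the text does not specify them.

```python
from typing import List

Mask = List[List[bool]]


def dense_causal_mask(n: int) -> Mask:
    """Position i may attend to every position j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def banded_causal_mask(n: int, window: int) -> Mask:
    """Position i may attend only to the last `window` positions up to i."""
    return [[(j <= i) and (i - j < window) for j in range(n)] for i in range(n)]


def layer_masks(num_layers: int, n: int, window: int) -> List[Mask]:
    """Alternate mask types by layer (assumed: even = dense, odd = banded)."""
    return [
        dense_causal_mask(n) if layer % 2 == 0 else banded_causal_mask(n, window)
        for layer in range(num_layers)
    ]


# Toy configuration: 4 layers, sequence length 5, band width 2.
masks = layer_masks(num_layers=4, n=5, window=2)
```

In this toy setup the last token sees all five positions in a dense layer but only the final two in a banded layer, which is what reduces attention cost in the banded layers.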


