Turn Your DeepSeek AI Into a High-Performing Machine

An example of limited-memory AI is self-driving cars. Limited language support: Amazon Q Developer supports a narrower range of programming languages than its competitors. Infervision, a Chinese AI developer that created a system similar to Alibaba's, has also partnered with CES Descartes Co., a Tokyo-based medical AI startup, and in early June obtained authorization from Japan's Ministry of Health, Labor and Welfare to manufacture and sell its product. ChatGPT maker OpenAI. The model was also more cost-efficient, though it still used expensive Nvidia chips to train the system on troves of data. Andrej Karpathy suggests treating your AI questions as if you were asking human data labelers. For the US government, DeepSeek’s arrival on the scene raises questions about its strategy of trying to contain China’s AI advances by restricting exports of high-end chips. What’s remarkable, however, is that we’re comparing one of DeepSeek’s earliest models with one of ChatGPT’s advanced models. Gleeking. What’s that, you ask? For example, it can sometimes generate incorrect or nonsensical answers and lacks real-time information access, relying solely on pre-existing training data. The model employs a self-attention mechanism to process and generate text, allowing it to capture complex relationships within the input data. Let’s now discuss the training process of the second model, DeepSeek-R1.
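
The paragraph above mentions self-attention without showing what it computes. Here is a minimal, single-head sketch of textbook scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, in plain Python. The weight matrices `w_q`, `w_k`, and `w_v` and the toy inputs are hypothetical; real models learn these weights, use many heads, and run on optimized tensor libraries, and the source does not say which attention variant either product uses.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    inner = len(b)
    return [
        [sum(a[i][t] * b[t][j] for t in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the row maximum first so math.exp cannot overflow.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(q: Matrix, k: Matrix) -> Matrix:
    """Row i holds how strongly token i attends to every token: softmax(q_i . k_j / sqrt(d_k))."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    return [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head self-attention: queries, keys, and values all come from the same input x."""
    # Hypothetical projection weights; a trained model would learn w_q, w_k, w_v.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    # Each output row is a weighted average of the value rows.
    return matmul(attention_weights(q, k), v)
```

Each output row mixes information from every token in the input, with weights that sum to 1. A quick sanity check: when every token is identical, the weights are uniform and the output equals the input.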

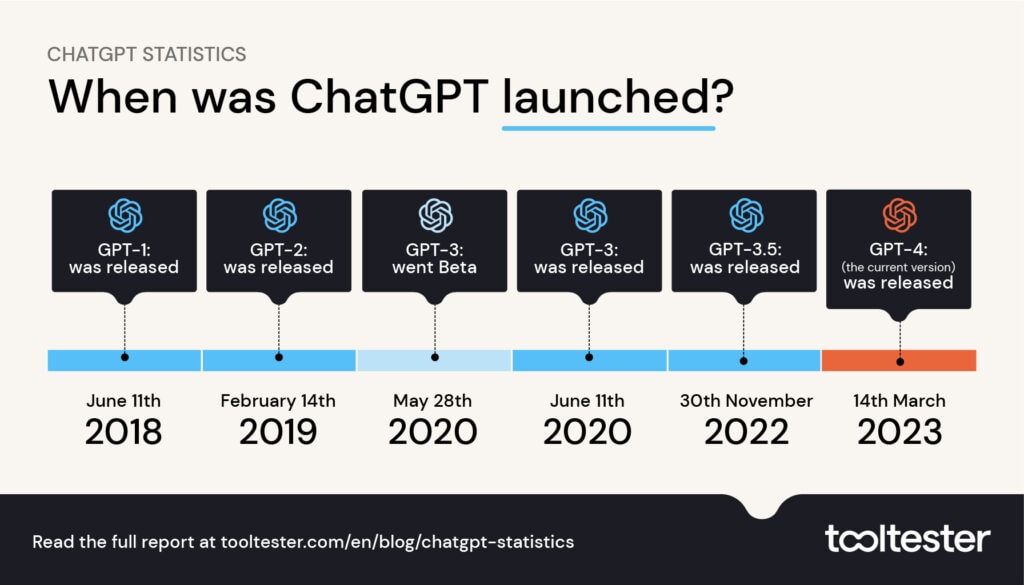

DeepSeek’s MoE (mixture-of-experts) architecture allows it to process data more efficiently. The two models use different architecture types, which also changes how they perform. It essentially memorized the way I use an internal tool incorrectly. If you’re new to ChatGPT, check our article on how to use ChatGPT to learn more about the AI tool. Tech companies have said their electricity use is going up when it was supposed to be ramping down, upending their carefully laid plans to address climate change. With a claimed $5.6 million spent on DeepSeek, compared with the billions US tech companies are spending on models like ChatGPT, Google Gemini, and Meta Llama, the Chinese AI model is a force to be reckoned with. Among the details that stood out was DeepSeek’s claim that training the flagship V3 model behind its AI assistant cost only $5.6 million, a strikingly low figure compared with the multiple billions of dollars spent to build ChatGPT and other well-known systems. By Monday, DeepSeek’s AI assistant had overtaken OpenAI’s ChatGPT as the most-downloaded free app on Apple’s App Store. ChatGPT is a generative AI platform launched by OpenAI in 2022. It uses the Generative Pre-trained Transformer (GPT) architecture and is powered by OpenAI’s proprietary large language models (LLMs) GPT-4o and GPT-4o mini.

Chinese AI firm DeepSeek has released a range of models capable of competing with OpenAI’s, in a move that experts told ITPro showcases the strength of open-source AI. DeepSeek claims that R1, released in January, performs as well as OpenAI’s o1 model on key benchmarks. Even though the model from Chinese AI company DeepSeek is quite new, it is already regarded as a close competitor to older AI models such as ChatGPT, Perplexity, and Gemini. Just days before DeepSeek filed an application with the US Patent and Trademark Office for its name, a company called Delson Group swooped in and filed one ahead of it, as reported by TechCrunch. For startups and smaller companies that want to use AI but don’t have large budgets for it, DeepSeek R1 is a good choice. The model can ask the robots to perform tasks, and they use onboard systems and software (e.g., local cameras, object detectors, and movement policies) to carry them out. Users can run their own or third-party local models based on Ollama, which offers flexibility and customization options. Its sophisticated language comprehension allows it to maintain context across interactions, providing coherent and contextually relevant responses. Because it is trained on large text-based datasets, ChatGPT can perform a diverse range of tasks, such as answering questions, generating creative content, assisting with coding, and offering educational guidance.
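
The source doesn't name the tool or show how it connects to Ollama. As a generic, hedged sketch, the Python below sends a prompt to a locally running Ollama server through its REST endpoint `POST /api/generate` on the default port 11434, using only the standard library. It assumes Ollama is installed and running and that a model has already been pulled (for example with `ollama pull deepseek-r1`); the `deepseek-r1` tag is an assumption, so substitute whichever local model you use.

```python
import json
import urllib.request

# Ollama's default local address; change it if your server listens elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "deepseek-r1") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request for a local Ollama server."""
    # "deepseek-r1" is an assumed model tag; use any model you've pulled locally.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "deepseek-r1", timeout: float = 120.0) -> str:
    """Send the request and return the generated text from the JSON reply."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With stream=False, Ollama returns one JSON object whose "response" field holds the text.
    return body["response"]
```

Calling `generate("Summarize mixture-of-experts in one sentence.")` returns the model's reply. Setting `stream` to `False` trades incremental output for a single, easy-to-parse JSON object; keeping request construction separate makes it easy to inspect or swap the model without touching the network code.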

However, it’s important to note that speed can vary depending on the specific task and context. Imagine a team of specialized experts, each focusing on a specific task. With 175 billion parameters, ChatGPT’s architecture ensures that all of its "knowledge" is available for every task. With a staggering 671 billion total parameters, DeepSeek activates only about 37 billion of them for each token it processes: that’s like calling in just the right specialists for the job at hand. This means that, unlike DeepSeek, ChatGPT does not activate only the parameters a given prompt requires. 3. Prompting the models: the first model receives a prompt explaining the desired outcome and the provided schema. The Massive Multitask Language Understanding (MMLU) benchmark tests models on a wide range of subjects, from the humanities to STEM fields. Although both DeepSeek and ChatGPT are AI platforms that use natural language processing (NLP) and machine learning (ML), the way they are trained and built is quite different.
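
The "team of specialists" analogy describes mixture-of-experts (MoE) routing: a gate scores every expert, only the top-scoring few actually run, and their outputs are blended. The sketch below is a generic, simplified top-k softmax router in plain Python, not DeepSeek's actual implementation; the toy experts, the gate scores, and `k` are hypothetical, and in a real model the gate scores come from a learned layer applied to each token. It also computes the active-parameter share implied by the 37-billion-of-671-billion figures.

```python
import math
from typing import Callable, List, Sequence, Tuple

# A hypothetical "expert": any function mapping a token vector to a vector of the same size.
Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their scores into weights summing to 1."""
    chosen = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x: List[float], gate_scores: Sequence[float],
                experts: Sequence[Expert], k: int) -> List[float]:
    """Run only the selected experts on token x and return their weighted sum."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(gate_scores, k):
        y = experts[idx](x)  # unselected experts are never called, so their parameters stay idle
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of parameters used per token, e.g. 37B active out of 671B total."""
    return active_params / total_params


print(f"{active_fraction(37, 671):.1%}")  # prints 5.5%
```

With `k=2` out of four experts, half of the expert functions never execute for a given token; scaled up, that is the sense in which DeepSeek is described as using roughly 5.5% of its parameters per token, while a dense model runs all of its weights every time.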
